What Is Robots.txt?

Robots.txt Tells Crawlers Whether To Crawl Your Website In Parts Or In Entirety

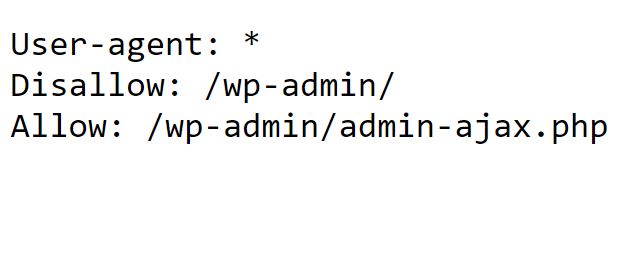

Robots.txt is the file behind the Robots Exclusion Protocol, a standard that lets websites tell web crawlers which parts of the site they may crawl. It instructs search engine crawlers which pages they can or can’t request from your site. The file always sits at the root of a domain, for example https://corkboardconcepts.com/robots.txt.

Its main purpose is to limit crawler requests so your site isn’t overloaded with traffic from bots. Because robots.txt steers search engines and other crawlers toward the pages you want crawled and away from the ones you don’t, it is a valuable tool for search. Popular WordPress SEO plugins create a robots.txt file and a sitemap by default, and setting them up is a foundational step of SEO.
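
To make these rules concrete, here is a minimal sketch using Python’s standard `urllib.robotparser` module (Python 3.8+). The robots.txt contents, the `example.com` URLs, the `ExampleBot` user agent, and the helper names `robots_url` and `build_parser` are hypothetical and chosen for illustration. The rules are loosely modeled on common WordPress settings, and this is not Corkboard Concepts’ actual file. The sketch shows where crawlers look for the file, how a compliant crawler checks whether it may request a page, and how a Sitemap line is read.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for illustration only; not a real site's file.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Allow: /wp-admin/admin-ajax.php
Disallow: /wp-admin/
Crawl-delay: 10

Sitemap: https://example.com/sitemap.xml
"""


def robots_url(page_url: str) -> str:
    """Return the robots.txt URL for the host serving page_url.

    Crawlers only look for robots.txt at the root of a host, so the
    path, query, and fragment of the page URL are discarded.
    """
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def build_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text directly instead of fetching it over HTTP."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


if __name__ == "__main__":
    print(robots_url("https://example.com/blog/some-post/?ref=nav"))
    rp = build_parser(SAMPLE_ROBOTS_TXT)
    # A compliant crawler asks before each request.
    for path in ["/blog/", "/wp-admin/options.php", "/wp-admin/admin-ajax.php"]:
        print(path, rp.can_fetch("ExampleBot", "https://example.com" + path))
    print("crawl delay:", rp.crawl_delay("ExampleBot"))
    print("sitemaps:", rp.site_maps())
```

Python’s parser applies the first Allow or Disallow line that matches, while Google uses the most specific (longest) match, so the sample lists the Allow line first to get the same answer under both. Google’s crawler also ignores Crawl-delay, although some other crawlers honor it.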

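It’s important to note that robots.txt is not a way of keeping a web page out of Google. A blocked page can still appear in search results if other sites link to it; to keep a page out of Google, use a noindex directive or password-protect the page instead.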
